Exploration is a fundamental problem in robotics. While sampling-based planners have shown high performance and robustness, they are often compute-intensive and can exhibit high variance. To this end, we propose to learn both components of sampling-based exploration. We present a method to directly learn an underlying informed distribution of views based on the spatial context in the robot's map, and further explore a variety of methods to also learn the information gain of each sample. We show in a thorough experimental evaluation that our proposed system improves exploration performance by up to 28% over classical methods, and find that learning the gains in addition to the sampling distribution can provide favorable performance vs. compute trade-offs for compute-constrained systems. We demonstrate in simulation and on a low-cost mobile robot that our system generalizes well to varying environments.

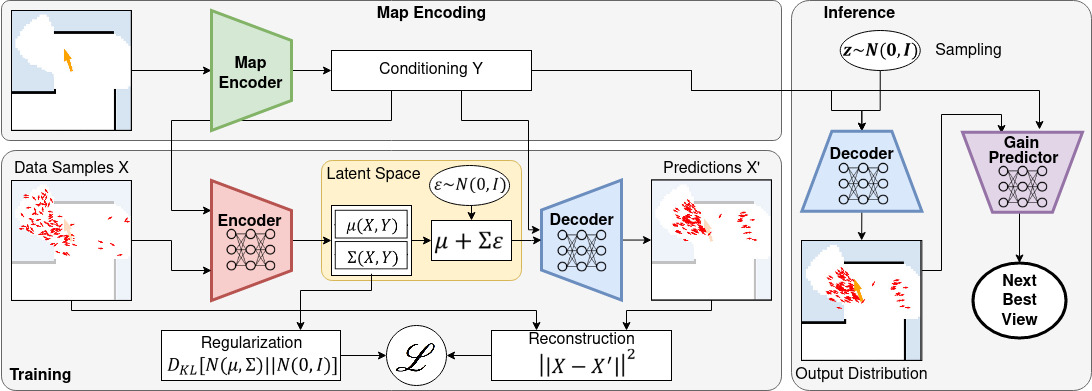

The encoder and decoder are trained offline in CVAE fashion to learn an underlying distribution of viewpoints for a map, given demonstrations of informative samples. The map encoder and gain predictor can be trained independently in a supervised fashion. At run-time, any random seed can be drawn from the prior distribution and mapped to an output sample. After a gain is assigned and the utility is computed, the next action is chosen.
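The run-time loop described in this caption can be sketched roughly as follows. This is a minimal illustration only, not the authors' implementation: `decode`, `predict_gain`, and `utility` are hypothetical stand-ins for the learned decoder, the gain predictor, and the utility function, and the `map_context` dictionary, the three-dimensional latent seed, and the gain-over-cost utility are all assumptions made for the example.

```python
import math
import random


def decode(z, map_context):
    """Hypothetical stand-in for the learned decoder.

    Maps a latent seed z (drawn from the prior) to a viewpoint (x, y, yaw)
    near the robot. A real decoder would be a trained network conditioned
    on the encoded map; here a fixed squashing function is assumed.
    """
    cx, cy = map_context["robot_xy"]
    radius = map_context["radius"]
    x = cx + radius * math.tanh(z[0])
    y = cy + radius * math.tanh(z[1])
    yaw = math.pi * math.tanh(z[2])
    return (x, y, yaw)


def predict_gain(view, map_context):
    """Hypothetical stand-in for the gain predictor.

    Counts unknown cells within sensor range of the viewpoint.
    """
    x, y, _ = view
    sensor_range = map_context["sensor_range"]
    return sum(
        1
        for ux, uy in map_context["unknown_cells"]
        if math.hypot(ux - x, uy - y) <= sensor_range
    )


def utility(gain, view, map_context):
    """Assumed utility: gain discounted by travel distance from the robot."""
    cx, cy = map_context["robot_xy"]
    cost = math.hypot(view[0] - cx, view[1] - cy)
    return gain / (1.0 + cost)


def select_next_view(map_context, n_samples, rng):
    """Draw n_samples seeds from a standard normal prior, decode each to a
    viewpoint, score it, and return the viewpoint with the highest utility."""
    best_view, best_utility = None, -math.inf
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(3)]
        view = decode(z, map_context)
        gain = predict_gain(view, map_context)
        u = utility(gain, view, map_context)
        if u > best_utility:
            best_view, best_utility = view, u
    return best_view, best_utility


if __name__ == "__main__":
    context = {
        "robot_xy": (0.0, 0.0),
        "radius": 2.0,
        "sensor_range": 1.5,
        "unknown_cells": [(1.0, 1.0), (1.5, 1.0), (-3.0, 0.0)],
    }
    view, score = select_next_view(context, n_samples=10, rng=random.Random(0))
    print("next view:", view, "utility:", score)
```

In this sketch, `n_samples` plays the role of the sample count N: with an informed sampling distribution, fewer samples need to be decoded and scored before a useful viewpoint is found.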

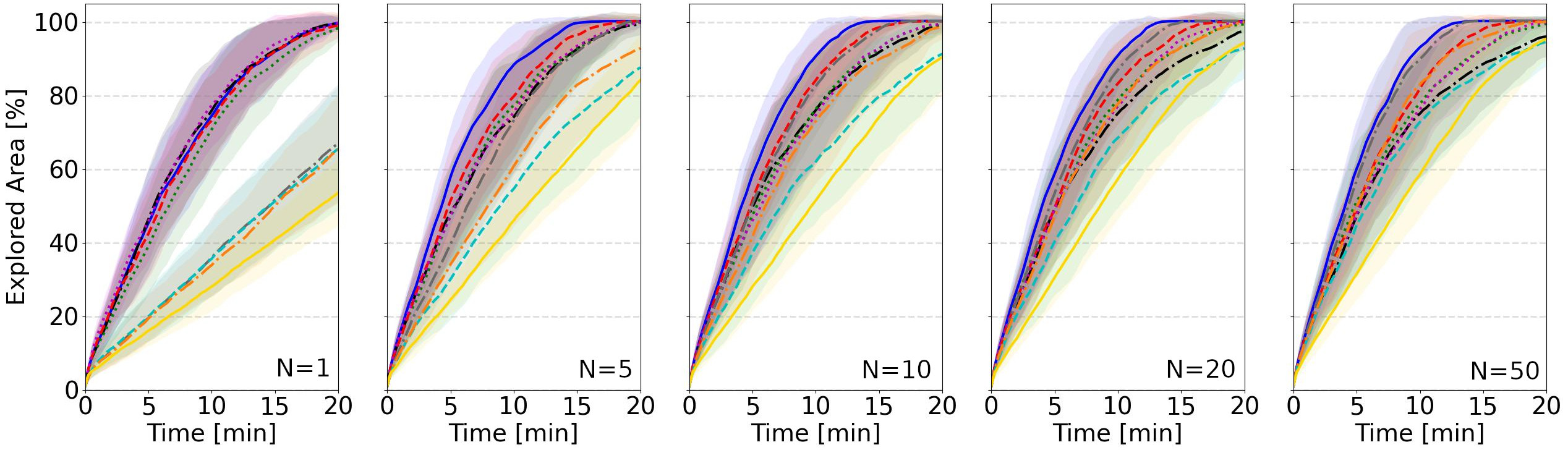

Exploration performance in the Maze environment for numbers of samples N={1,5,10,20,50}. We observe that the learnt sampling distribution significantly improves performance, most notably for few samples. As N, and with it coverage, increases, the information gain computation becomes more important. For details, please refer to our paper.

Lukas Schmid, Chao Ni, Yuliang Zhong, Roland Siegwart, and Olov Andersson. "Fast and Compute-efficient Sampling-based Local Exploration Planning via Distribution Learning." In IEEE Robotics and Automation Letters, June 2022 (hosted on arXiv).

Acknowledgements